The "Friend Finding" Feature No One Asked For: OpenAI’s Pivot to Surveillance Capitalism

How the February 2026 privacy update transforms ChatGPT from a tool into a tracking ecosystem.

It arrived in my inbox at 8:00 PM on Valentine's Day, a cynical time slot often reserved for companies releasing news they hope you won't notice. The subject line was innocuous enough: "Updates to OpenAI's Privacy Policy".

The email framed the changes as a benevolent exercise in transparency, claiming the update was designed to "give you more information about what data we collect" and "how you can control it". It highlighted three seemingly helpful pillars: a new feature for "Finding friends on OpenAI services", "Age prediction" mechanisms to provide "safeguards for teens", and details on "New tools" like the Atlas browser and Sora 2.

On the surface, it reads like a standard housekeeping update for a scaling tech company. But if you ignore the friendly summary and conduct a forensic line-by-line comparison of the new 28-page policy against the archived 2024 version, a different story emerges.

This is textbook "Enshittification" of the platform. OpenAI is no longer content with being a tool you use; they are pivoting to a model based on Surveillance Capitalism. The era of the passive helper and confidant is over. The era of the active extraction of your "behavioral surplus" (data you didn't ask to give, used for purposes you didn't agree to) has officially begun.

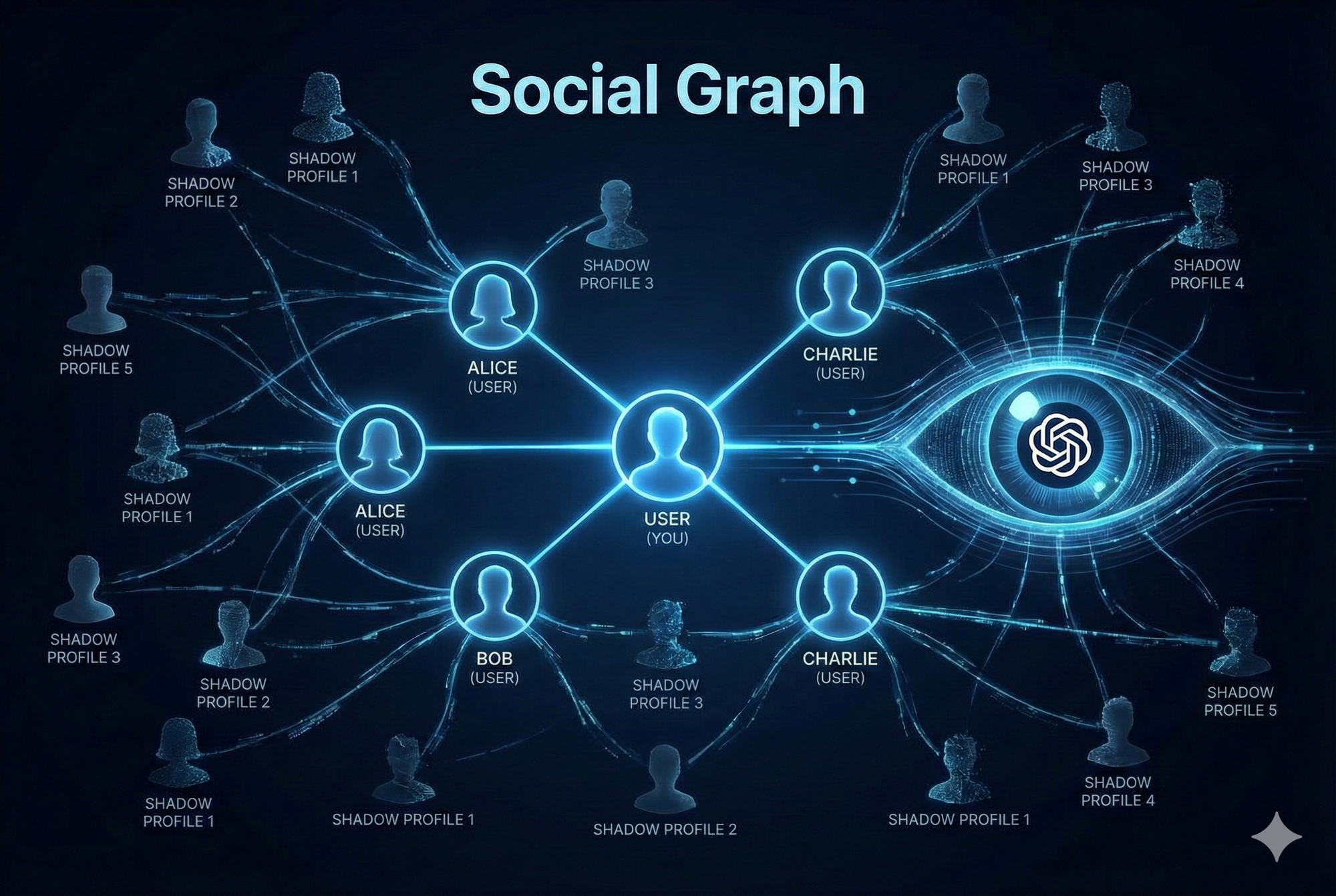

The Social Graph (Mining Your Relationships)

The email sells this as a warm, community-building update: "You can now choose to sync your contacts to see who else is using our services." It’s framed as a convenience feature, a way to find your friends in the lonely void of an AI chat window. But turn to Section 1 (Personal Data we collect) of the new policy and the language shifts from "finding friends" to data ingestion. The policy explicitly states:

"We upload information from your device address books and check which of your contacts also use our Services."

But the devil is in the detail of whose data is involved. The policy openly admits to processing the contact information of "people who don't use our Services".

The "Behavioral Surplus" Angle: Why does a chatbot need your address book? It doesn’t. But an advertising platform does.

This is the foundational step in building a Social Graph, the most valuable asset in the digital ad economy. By convincing you to "sync contacts," OpenAI isn't just looking for your friends; they are building Shadow Profiles of everyone you know. Even if your colleague Alice has never signed up for ChatGPT, if you sync your contacts, OpenAI now knows Alice’s phone number, email, and crucially, that she is connected to you.

This is a textbook application of Shoshana Zuboff’s Surveillance Capitalism. The "friend finding" feature extracts data you didn't need to give (your entire network) to generate a "prediction product" they can sell: a map of who knows whom. In the ad industry, a map like this feeds what is often called "Lookalike Modelling": if you are a high-value user, your friends likely are too. By mapping your connections, they could target ads to your network before those people even sign up.

The "Atlas" Browser (Tracking Your Attention)

Under the vague heading "New tools and features," the email casually mentions they have "added details about Atlas". It sounds like a benign software update: perhaps a new interface, or a helpful Chrome extension to make your workflow smoother.

The 2026 privacy policy reveals that Atlas isn't just a tool; it is a surveillance engine. In 2024, OpenAI’s "Log Data" was limited to the standard debris of the internet: your IP address and browser type. In 2026, the definition of "Usage Data" has expanded to include a new category. The policy states: "If you use the Atlas browser we may also collect your browser data according to your controls".

What is "browser data"? It is the record of your digital consciousness.

The "Incognito" Trap: Sometimes what isn't said is more revealing than what is. The policy says nothing explicit about the default settings. Instead, it reassures users that "your Atlas incognito browsing history won't be saved after you end your session".

Call this a Negative Confirmation. By specifying that incognito history is deleted, they imply that standard browsing history is retained. Unless you actively toggle a privacy switch every time you log in, OpenAI may now be recording not just what you say to the AI, but every website you visit, every article you read, and every product you consider buying while using their browser.

This marks OpenAI's strategic pivot from a Destination to Middleware.

- Old Model: You went to ChatGPT.com to ask a question. They owned the answer.

- New Model: You use Atlas to browse the web. They own the journey.

By inserting themselves between you and the internet, they are capturing your Clickstream Data, the single most predictive dataset for consumer behaviour. They aren't just monetising the chat anymore; they are monitoring your attention across the entire web. This is the raw fuel required for the "search and shopping providers" they have quietly added to their list of data partners.

Inference as a Product (Predicting Your Identity)

The email frames this update as a moral crusade for child safety. Under the heading "Age prediction & safeguards for teens," OpenAI claims they are simply using these tools "to help provide safer, more age-appropriate experiences." It is the classic "Think of the Children" defence, a shield so virtuous that to question it feels almost deviant.

In reality, you are left with a mechanism for Biometric Inference.

The policy states they process data "to estimate your age". This is fundamentally different from verifying your age. Verification is checking a government ID (a binary yes/no). Estimation is an invasive, continuous analysis of your behaviour.

According to OpenAI's own technical disclosures (often buried in "Safety" blog posts rather than the main policy), this model analyses "behavioural and account-level signals". In plain English, that could mean watching how you type, the vocabulary you use, the complexity of your sentence structures, and the time of day you are active.

This is the extraction of Behavioural Surplus in its purest form. You didn't ask OpenAI to analyse your syntax to guess if you are 16 or 46. You asked it to debug code or 'assist' you with your assignment. They are taking the byproduct of that interaction (your behavioural "exhaust") and turning it into a prediction product.

The Danger of the "Teen" Tag: If an AI can infer you are a "teen" based on your anxiety levels, typing speed, and slang, it can infer almost anything else.

- Mental Health: Depression, neurodivergence, or insomnia.

- Status: Employment status or financial desperation.

- Politics: Ideological leanings based on prompt framing.

Once these attributes are inferred, they stop being "safety features" and become targeting parameters. This connects directly to the new "Disclosure of Personal Data" section, which now includes "Search and Shopping providers". They aren't just protecting teens; they are segmenting users. A "Teen" label is a safety flag for a parent, but it is a "Demographic Segment" for an advertiser.

The 2026 policy grants them the right to guess who you are, even if you refuse to tell them.

The Commercial Pivot (The "Shopping" Leak)

The email wraps up with a promise of "More transparency around data". It sounds like a victory for user rights, a benevolent corporation finally explaining "how long we keep data" and the "legal bases we rely on." It invites you to feel in control.

In reality, "transparency" is often a legal synonym for "disclosure of new commercial partners."

If you scroll deep into the "Disclosure of Personal Data" section of the 2026 policy, you will find a quiet but important addition. In 2024, OpenAI shared data with "service providers" (hosting, support). In 2026, they explicitly list "Search and Shopping providers" as a distinct category of third-party recipients.

This isn't a random addition. It provides the legal infrastructure for OpenAI’s recent announcement that they are "Testing Ads in ChatGPT". When you view the ecosystem as a whole, the strategy becomes undeniable. It reveals a closed-loop ad platform:

- Collect (Section 1 & 2): They map your social graph (Contact Sync) and track your intent (Atlas Browser).

- Infer (Section 3): They predict your demographics and vulnerabilities (Age Prediction).

- Monetise (Section 4): They feed this high-fidelity profile to "Shopping providers" to serve you targeted results.

The "Transparency" claimed in the email is not about empowering you; it is about immunising them. By disclosing these partners now, they are legally clearing the path to turn your private conversations into a marketplace. The product you are using is no longer a research preview; you, the 'consumer', are being consumed.

SDG 12 & The Death of the Tool

Viewing this update through the lens of the UN Sustainable Development Goals (SDGs), specifically SDG 12 (Responsible Consumption and Production), lets us put OpenAI to a test of ethical sustainability.

Sustainable business is not just about carbon footprints; it is about transparency. A company that relies on obscuring its business model to maintain user trust is, by definition, operating unsustainably. When a "privacy" update is used to mask a massive data-harvesting operation, hiding social graph mapping behind "finding friends" and surveillance behind "safety", does it not violate the fundamental right to informed decision-making (a core tenet of SDG 16.10)?

To what extent should the buyer beware? To what extent should users start to take responsibility for their own online behaviours in the same way they are expected to offline? Has education in this space truly failed?

Who Cares? It's Just the FREE VERSION

There is nothing in the February 2026 privacy policy to suggest that paying for a personal subscription (ChatGPT Plus or Pro) exempts you from this new data collection. In fact, the evidence suggests the opposite: you are paying twice, once with your wallet and again with your data.

The "Plus" Trap (Individual Subscriptions)

The policy explicitly mentions "ChatGPT Plus or Pro" users in the Retention section. This confirms that individual paid accounts are governed by this specific policy, the same one that authorises:

- Contact Syncing (Social Graphing)

- Atlas Browser Tracking

- Age Prediction Inference

There is no clause that says "If you are a subscriber, we do not collect this data." The only benefit you likely get is that they don't show you the ads; they still harvest the data to build the ad models for everyone else.

The only users who are safe from this surveillance are those on "Business Offerings" (specifically API and Enterprise). The policy states explicitly: "This Privacy Policy does not apply to content that we process on behalf of customers of our business offerings, such as our API." If you are a corporate customer (ChatGPT Enterprise), you have a separate contract. If you are a human being with a credit card (ChatGPT Plus), you are fair game.

This only reinforces the "Enshittification". Privacy is now a luxury good, but even paying £20/month for "Plus" doesn't buy it. It only buys you a cleaner interface while the surveillance engine hums quietly in the background.

We are witnessing the death of the "Tool" and the birth of the "Broker."

In 2024, ChatGPT was a utility. You paid (with money or attention) to use a service. In 2026, the service is a lure. The real transaction is happening backstage, where your relationships, your browsing history, and your inferred identity are being packaged for a new set of partners.

The email wasn't an update. It was a warning. The product has changed. And the new Privacy Policy isn't a contract to protect your rights; it is the Terms of Surrender for your digital life.
